Detailed Analysis Method for Conversion Failures

Overview

This step walks through the detailed procedure for extracting and analyzing information about layers that failed conversion, using AcuiRT's output for DETR.

This tutorial records the results of actual refactoring performed in the environment described in Execution Environment. Conversion results may vary depending on the TensorRT and PyTorch versions and on the generation and model of the GPU used.

Checking output report

Check the reports generated in the previous step and analyze the cause of the conversion failures. When you run test.py, the reports and TensorRT engine files are written to the path specified by --trt-engine-dir. With the sample script, they are written to exps/baseline. The output files are as follows.

workflow_report.json

This file contains the accuracy, latency, and conversion rate. It is useful for comparing performance before and after applying AcuiRT and for getting an overall picture.

- conversion_rate: A pair containing the number of nn.Modules converted to a TensorRT engine by AcuiRT and the total number of nn.Modules. This indicates how many nn.Modules AcuiRT was able to convert to a TensorRT engine. Ideally, the number of converted nn.Modules matches the total number of nn.Modules.

- num_modules: The total number of TensorRT engines generated by AcuiRT during conversion. Ideally, all nn.Modules are converted into a single TensorRT engine, so this value will be 1.

- performance: Contains the accuracy and latency of the overall model when using AcuiRT.

- non_converted_performance: Contains the accuracy and latency when using the PyTorch model.

Example of workflow_report.json

{
  "conversion_rate": [
    73,
    447
  ],
  "num_modules": 63,
  "performance": {
    "accuracy": {
      "AP": 0.41445725757137836
    },
    "latency": "66.11 ms"
  },
  "non_converted_performance": {
    "accuracy": {
      "AP": 0.5310819473995319
    },
    "latency": "60.92 ms"
  }
}

In the DETR example, the following can be seen from this report.

- num_modules in workflow_report.json is 63 instead of the ideal 1. This means that the DETR model is split into multiple TensorRT engines.

- conversion_rate shows that only about 16% of the modules (73 of 447) are computed on TensorRT. In this state, the remaining 84% or so of the modules are still executed on PyTorch. Therefore, there is room for optimization.
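The figures above can be derived directly from the report. The following is a minimal sketch, assuming the file has exactly the keys shown in the example; the `summarize` helper is a hypothetical name introduced here for illustration, not part of AcuiRT.

```python
import json

# Sample copied from the workflow_report.json example in this section.
REPORT_TEXT = """
{
  "conversion_rate": [73, 447],
  "num_modules": 63,
  "performance": {"accuracy": {"AP": 0.41445725757137836}, "latency": "66.11 ms"},
  "non_converted_performance": {"accuracy": {"AP": 0.5310819473995319}, "latency": "60.92 ms"}
}
"""


def summarize(report: dict) -> dict:
    """Derive headline numbers from a workflow report (hypothetical helper)."""
    converted, total = report["conversion_rate"]

    def latency_ms(section: str) -> float:
        # Latency is stored as a string such as "66.11 ms".
        return float(report[section]["latency"].split()[0])

    def ap(section: str) -> float:
        return report[section]["accuracy"]["AP"]

    return {
        "converted_ratio": converted / total,
        "num_engines": report["num_modules"],
        # Positive value: the converted model is slower than plain PyTorch.
        "latency_delta_ms": latency_ms("performance") - latency_ms("non_converted_performance"),
        # Negative value: the converted model is less accurate than plain PyTorch.
        "ap_delta": ap("performance") - ap("non_converted_performance"),
    }


summary = summarize(json.loads(REPORT_TEXT))
print(f"converted modules: {summary['converted_ratio']:.1%}")
print(f"TensorRT engines:  {summary['num_engines']}")
print(f"latency delta:     {summary['latency_delta_ms']:+.2f} ms")
print(f"AP delta:          {summary['ap_delta']:+.4f}")
```

In a real run, the sample string would be replaced by the contents of workflow_report.json in the directory passed to --trt-engine-dir.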

statistics.csv

This file contains statistics aggregated by module class, conversion status (failure/success), and error. It helps in understanding error trends and identifying bottlenecks.

- Example of statistics.csv

| status | error | num_errors | module_class_name | module_code_filename | module_code_line | device_time_total | cpu_time_total | device_events_total | cpu_events_total | used_named_modules |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| failed | Failed to export the model with torch.export. \x1... | 2 | DETR | detr/models/detr.py | 21 | 3.744 | 133389 | 3 | 3 | [''] |
| failed | Failed to export the model with torch.export. \x1... | 1 | Joiner | detr/models/backbone.py | 96 | 0 | 117218 | 0 | 3 | ['backbone'] |
| failed | Failed to export the model with torch.export. \x1... | 2 | Backbone | detr/models/backbone.py | 83 | 53.823 | 117077 | 3 | 3 | ['backbone.0'] |
| failed | Dynamic shape loading is not implemented yet. | 1 | IntermediateLayerGetter | detr/.venv/lib/python3.12/... | 13 | 0 | 117027 | 0 | 3 | ['backbone.0.body'] |
| failed | Dynamic shape loading is not implemented yet. | 105 | Conv2d | detr/.venv/lib/python3.12/... | 374 | 0 | 59274.4 | 0 | 315 | ['input_proj', 'backbone.0.body.conv1', 'backbone... |
| failed | Dynamic shape loading is not implemented yet. | 208 | FrozenBatchNorm2d | detr/models/backbone.py | 19 | 32142.6 | 30941.7 | 312 | 312 | ['backbone.0.body.bn1', 'backbone.0.body.layer1.0... |
| failed | Dynamic shape loading is not implemented yet. | 68 | ReLU | detr/.venv/lib/python3.12/... | 105 | 10230.9 | 10230.9 | 300 | 300 | ['backbone.0.body.relu', 'backbone.0.body.layer1.... |
| failed | Dynamic shape loading is not implemented yet. | 2 | MaxPool2d | detr/.venv/lib/python3.12/... | 157 | 1187.73 | 1187.73 | 3 | 3 | ['backbone.0.body.maxpool'] |
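To see which error dominates, the rows can be grouped by error message. The sketch below uses only the standard library and an inline excerpt; the column names come from the example above, while the exact on-disk layout of statistics.csv, the shortened error text, and the `failures_by_error` helper are assumptions made for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical excerpt: a subset of the columns and rows shown in this section.
# The torch.export message is shortened here because the original is truncated.
SAMPLE_CSV = """status,error,num_errors,module_class_name,cpu_time_total
failed,Dynamic shape loading is not implemented yet.,105,Conv2d,59274.4
failed,Dynamic shape loading is not implemented yet.,208,FrozenBatchNorm2d,30941.7
failed,Dynamic shape loading is not implemented yet.,68,ReLU,10230.9
failed,Failed to export the model with torch.export.,2,DETR,133389
"""


def failures_by_error(csv_text: str) -> list:
    """Group failed rows by error message, most frequent error first."""
    totals = defaultdict(lambda: {"num_errors": 0, "classes": []})
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["status"] != "failed":
            continue
        entry = totals[row["error"]]
        entry["num_errors"] += int(row["num_errors"])
        entry["classes"].append(row["module_class_name"])
    return sorted(totals.items(), key=lambda kv: kv[1]["num_errors"], reverse=True)


ranking = failures_by_error(SAMPLE_CSV)
for error, info in ranking:
    print(f"{info['num_errors']:>4}  {error}  {info['classes']}")
```

Sorting by the error count surfaces the most widespread failure first, which is usually the most productive one to address.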

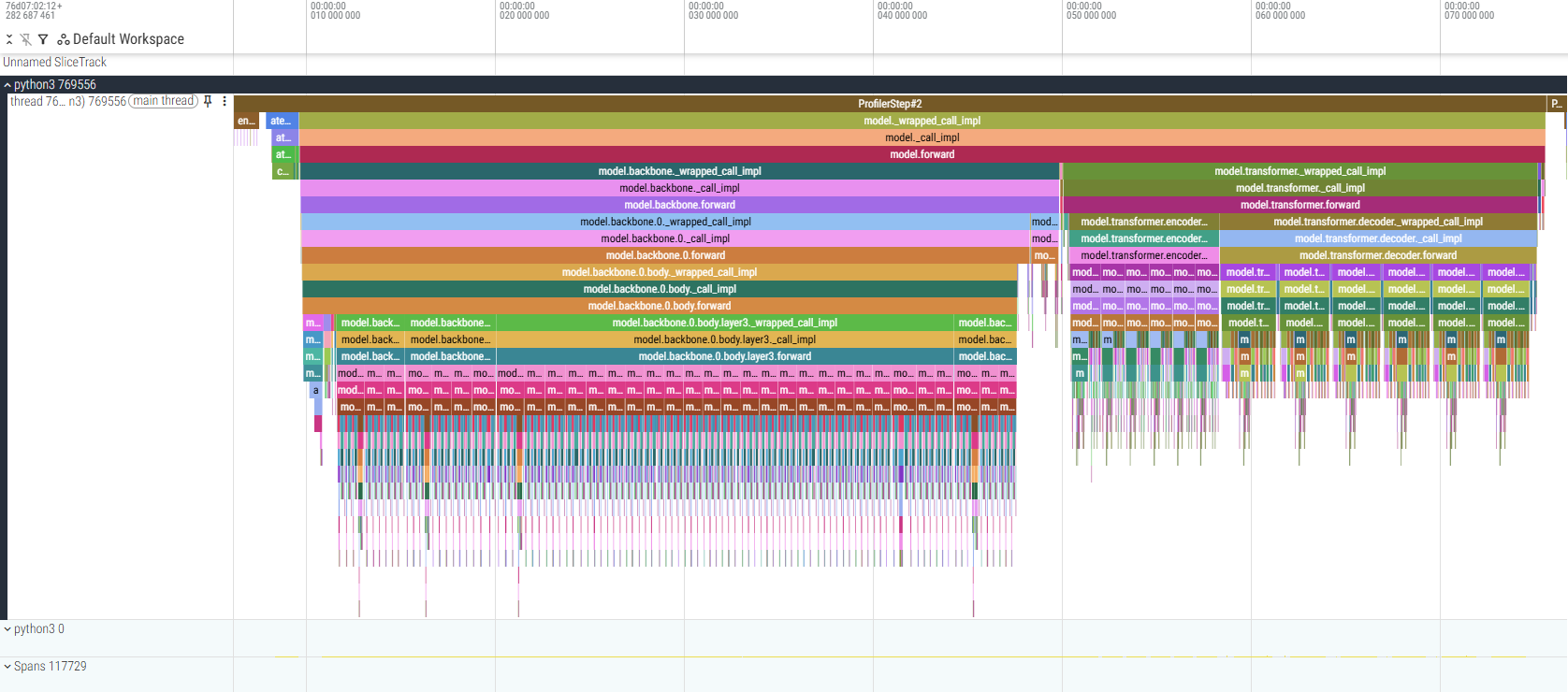

trace.json

This file contains the trace results from the PyTorch Profiler. Using Perfetto, you can visualize each module's inference time and its share of the total.

- Example visualized with Perfetto
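Besides viewing the trace in a UI, the same file can be summarized with a script. The following is a minimal sketch, assuming trace.json uses the Chrome trace event format (a top-level "traceEvents" list in which complete events have "ph": "X" and a "dur" in microseconds). The event names in the sample are hypothetical, and `total_duration_by_name` is a helper name introduced here.

```python
import json
from collections import Counter

# Hypothetical excerpt in Chrome trace event format; real traces are much larger.
SAMPLE_TRACE = json.dumps({
    "traceEvents": [
        {"ph": "X", "name": "Conv2d", "ts": 0, "dur": 120},
        {"ph": "X", "name": "ReLU", "ts": 120, "dur": 15},
        {"ph": "X", "name": "Conv2d", "ts": 135, "dur": 110},
        {"ph": "M", "name": "process_name", "args": {"name": "python"}},
    ]
})


def total_duration_by_name(trace_text: str) -> list:
    """Sum the duration (in microseconds) of complete events per event name."""
    events = json.loads(trace_text).get("traceEvents", [])
    totals = Counter()
    for event in events:
        # Only complete events ("X") carry a duration; metadata events are skipped.
        if event.get("ph") == "X":
            totals[event["name"]] += event.get("dur", 0)
    return totals.most_common()


durations = total_duration_by_name(SAMPLE_TRACE)
grand_total = sum(dur for _, dur in durations)
for name, dur in durations:
    print(f"{name:<10} {dur:>6} us  {dur / grand_total:.1%}")
```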

conversion_report.json

This file records, for each module, whether conversion succeeded or failed, the error details (traceback), the configuration options, and so on. It is useful for checking the current status.

{
  "": {
    "rt_mode": null,
    "children": null,
    "input_shapes": null,
    "input_args": null,
    "class_name": "DETR",
    "module_name": "models.detr",
    "status": "failed",
    "error": "Only tuples, lists and Variables are supported as JIT inputs/outputs. Dictionaries and strings are also accepted, but their usage is not recommended. Here, received an input of unsupported type: NestedTensor",
    "traceback": "Traceback (most recent call last):\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_auto.py\", line 70, in auto_convert2trt\n summary = CONVERSION_REGISTRY[conversion_mode](\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_onnx.py\", line 117, in convert_trt_with_onnx\n torch.onnx.export(**arguments)\n File \".venv/lib/python3.12/site-packages/torch/onnx/__init__.py\", line 375, in export\n export(\n File \".venv/lib/python3.12/site-packages/torch/onnx/utils.py\", line 502, in export\n _export(\n File \".venv/lib/python3.12/site-packages/torch/onnx/utils.py\", line 1564, in _export\n graph, params_dict, torch_out = _model_to_graph(\n ^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/onnx/utils.py\", line 1113, in _model_to_graph\n graph, params, torch_out, module = _create_jit_graph(model, args)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/onnx/utils.py\", line 997, in _create_jit_graph\n graph, torch_out = _trace_and_get_graph_from_model(model, args)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/onnx/utils.py\", line 904, in _trace_and_get_graph_from_model\n trace_graph, torch_out, inputs_states = torch.jit._get_trace_graph(\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/jit/_trace.py\", line 1500, in _get_trace_graph\n outs = ONNXTracedModule(\n ^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/nn/modules/module.py\", line 1736, in _wrapped_call_impl\n return self._call_impl(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/nn/modules/module.py\", line 1747, in _call_impl\n return forward_call(*args, **kwargs)\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packages/torch/jit/_trace.py\", line 106, in forward\n in_vars, in_desc = _flatten(args)\n ^^^^^^^^^^^^^^\nRuntimeError: Only tuples, lists and Variables are supported as JIT inputs/outputs. Dictionaries and strings are also accepted, but their usage is not recommended. Here, received an input of unsupported type: NestedTensor\n"
  },
  "transformer": {
    "rt_mode": null,
    "children": null,
    "input_shapes": null,
    "input_args": null,
    "class_name": "Transformer",
    "module_name": "models.transformer",
    "status": "failed",
    "error": "Dynamic shape loading is not implemented yet.",
    "traceback": "Traceback (most recent call last):\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_auto.py\", line 70, in auto_convert2trt\n summary = CONVERSION_REGISTRY[conversion_mode](\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_onnx.py\", line 68, in convert_trt_with_onnx\n args, keywords = input_args[0]\n ~~~~~~~~~~^^^\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/variable_cache.py\", line 128, in __getitem__\n raise NotImplementedError(\"Dynamic shape loading is not implemented yet.\")\nNotImplementedError: Dynamic shape loading is not implemented yet.\n"
  },
  "transformer.encoder": {
    "rt_mode": null,
    "children": null,
    "input_shapes": null,
    "input_args": null,
    "class_name": "TransformerEncoder",
    "module_name": "models.transformer",
    "status": "failed",
    "error": "Dynamic shape loading is not implemented yet.",
    "traceback": "Traceback (most recent call last):\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_auto.py\", line 70, in auto_convert2trt\n summary = CONVERSION_REGISTRY[conversion_mode](\n ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/converter/convert_onnx.py\", line 68, in convert_trt_with_onnx\n args, keywords = input_args[0]\n ~~~~~~~~~~^^^\n File \".venv/lib/python3.12/site-packagesaibooster/intelligence/acuirt/convert/variable_cache.py\", line 128, in __getitem__\n raise NotImplementedError(\"Dynamic shape loading is not implemented yet.\")\nNotImplementedError: Dynamic shape loading is not implemented yet.\n"
  }
}
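Because the report is keyed by module name, it is straightforward to list which modules share the same error. The sketch below is a minimal illustration that assumes each entry has the "status" and "error" fields shown above; the sample is a shortened copy of the example, and `group_failures` is a hypothetical helper name.

```python
from collections import defaultdict

# Shortened copy of the conversion_report.json example (tracebacks omitted).
SAMPLE_REPORT = {
    "": {
        "class_name": "DETR",
        "status": "failed",
        "error": "Only tuples, lists and Variables are supported as JIT inputs/outputs. "
                 "Dictionaries and strings are also accepted, but their usage is not "
                 "recommended. Here, received an input of unsupported type: NestedTensor",
    },
    "transformer": {
        "class_name": "Transformer",
        "status": "failed",
        "error": "Dynamic shape loading is not implemented yet.",
    },
    "transformer.encoder": {
        "class_name": "TransformerEncoder",
        "status": "failed",
        "error": "Dynamic shape loading is not implemented yet.",
    },
}


def group_failures(report: dict) -> dict:
    """Map each error message to the names of the modules that failed with it."""
    groups = defaultdict(list)
    for module_name, info in report.items():
        if info.get("status") == "failed":
            # The root module is stored under an empty key; label it for readability.
            groups[info.get("error")].append(module_name or "<root>")
    return dict(groups)


failures = group_failures(SAMPLE_REPORT)
for error, modules in failures.items():
    print(f"{len(modules)} module(s): {modules}")
    print(f"  {error[:70]}")
```

For an actual run, `SAMPLE_REPORT` would be replaced by the result of `json.load` on conversion_report.json in the output directory.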

Identify the cause of conversion failure from the conversion report

Reviewing the reports shows that the conversion errors fall into the following three patterns.

1. Detection of Dynamic Shape

Error:

Dynamic shape loading is not implemented yet.

- Cause: When AcuiRT analyzed the input to the module, it detected that the shape is changing dynamically (Dynamic Shape).

- Background: DETR accepts arbitrary image sizes, but from an optimization (TensorRT conversion) standpoint, a fixed size is recommended.
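To make the idea of a dynamic shape concrete, the sketch below checks whether the input shapes recorded over several calls differ. It is an illustration only and is not AcuiRT's actual detection logic; both function names and the sample shapes are hypothetical.

```python
from typing import Iterable, List, Sequence, Tuple


def distinct_shapes(recorded: Iterable[Sequence[int]]) -> List[Tuple[int, ...]]:
    """Return the distinct shapes seen across calls, in first-seen order."""
    seen: List[Tuple[int, ...]] = []
    for shape in recorded:
        key = tuple(shape)
        if key not in seen:
            seen.append(key)
    return seen


def is_dynamic(recorded: Iterable[Sequence[int]]) -> bool:
    """An input is treated as dynamic if more than one distinct shape was observed."""
    return len(distinct_shapes(recorded)) > 1


# Hypothetical shapes (N, C, H, W): image width varies from call to call.
variable_inputs = [(1, 3, 800, 1066), (1, 3, 800, 1201), (1, 3, 800, 1066)]
# Every image resized or padded to one size before inference.
fixed_inputs = [(1, 3, 800, 1216)] * 3

print("variable:", is_dynamic(variable_inputs), distinct_shapes(variable_inputs))
print("fixed:   ", is_dynamic(fixed_inputs), distinct_shapes(fixed_inputs))
```

Resizing or padding every image to one fixed size before inference is what turns the first case into the second.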

2. Unsupported Input Type (NestedTensor)

Error:

<class 'ValueError'>: Unsupported input type <class 'util.misc.NestedTensor'>

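- Cause: The error occurs because an instance of a custom class called NestedTensor is passed as input when exporting to ONNX format. In the conversion_report.json example above, the same cause appears for the root DETR module as a RuntimeError reporting an input of unsupported type NestedTensor.

- Background: In ONNX conversion, inputs must essentially be torch.Tensor objects (or a list/dictionary of them). Custom objects cannot be interpreted.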
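One common way to work around this kind of error is to unpack the custom container into plain values at the export boundary. The sketch below shows the idea without depending on PyTorch: `NestedTensorStandIn` is a stand-in that only mirrors the `tensors` and `mask` attributes of DETR's `util.misc.NestedTensor`, and `to_export_inputs` and `ExportFriendlyWrapper` are hypothetical names. In real code the two fields would be torch.Tensor objects and the wrapper would be an nn.Module.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class NestedTensorStandIn:
    """Stand-in for DETR's NestedTensor: a batch of images plus a padding mask."""
    tensors: Any
    mask: Any


def to_export_inputs(samples: NestedTensorStandIn) -> Tuple[Any, Any]:
    """Unpack the custom container into a plain tuple that an exporter can trace."""
    return (samples.tensors, samples.mask)


class ExportFriendlyWrapper:
    """Accept plain (tensors, mask) arguments and rebuild the container internally.

    Assumption: only the outermost call signature needs to change. Submodules that
    also receive the container would need the same treatment.
    """

    def __init__(self, model: Callable[[NestedTensorStandIn], Any]) -> None:
        self.model = model

    def __call__(self, tensors: Any, mask: Any) -> Any:
        return self.model(NestedTensorStandIn(tensors, mask))


# Nested lists stand in for tensors in this illustration.
samples = NestedTensorStandIn(tensors=[[1.0, 2.0]], mask=[[False, True]])
export_args = to_export_inputs(samples)

# A dummy "model" that simply returns what it received.
wrapped = ExportFriendlyWrapper(lambda s: (s.tensors, s.mask))
print(wrapped(*export_args))
```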

3. Inappropriate output format (e.g., dictionary type)

Error:

'str' object has no attribute 'detach'

- Cause: An unexpected data structure (such as a dictionary) is returned to AcuiRT's internal processing as the module output.

- Background: The output of a TensorRT engine is a list of torch.Tensor objects, regardless of the original PyTorch implementation. Rich structures such as dictionaries do not conform to this specification.
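A typical remedy is to return a flat tuple from the converted module and rebuild the dictionary outside it. The following is a minimal sketch of that round trip. The key names `pred_logits` and `pred_boxes` follow the output dictionary of the DETR reference implementation, while the two helper functions are hypothetical and strings stand in for tensors.

```python
from typing import Any, Dict, Sequence, Tuple

# Fixed key order so that tuple positions always map back to the same names.
OUTPUT_KEYS: Tuple[str, ...] = ("pred_logits", "pred_boxes")


def dict_to_tuple(outputs: Dict[str, Any], keys: Sequence[str] = OUTPUT_KEYS) -> Tuple[Any, ...]:
    """Flatten a dictionary output into a tuple, to be done inside the converted module."""
    return tuple(outputs[key] for key in keys)


def tuple_to_dict(values: Sequence[Any], keys: Sequence[str] = OUTPUT_KEYS) -> Dict[str, Any]:
    """Rebuild the dictionary from engine outputs, to be done outside the converted module."""
    if len(values) != len(keys):
        raise ValueError(f"expected {len(keys)} outputs, got {len(values)}")
    return dict(zip(keys, values))


# Placeholder values stand in for tensors in this illustration.
original = {"pred_logits": "logits-tensor", "pred_boxes": "boxes-tensor"}
flat = dict_to_tuple(original)
restored = tuple_to_dict(flat)
print(flat)
print(restored == original)
```

Keeping the key order in one shared constant avoids silently swapping outputs when the dictionary is rebuilt.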

In the next step, we analyze the failure reasons for each layer, refactor the code so that all DETR layers can be computed on TensorRT, and explain how to perform the optimization.