Basic Usage

Automatic hyperparameter tuning with ZenithTune consists of the following four steps.

1. Select a Tuner

Choose one of the four tuners provided by ZenithTune according to what you want to optimize.

- To optimize function execution time → FunctionRuntimeTuner

- To optimize command execution time → CommandRuntimeTuner

- To optimize specific values in a command's standard output → CommandOutputTuner

- To optimize a user-defined objective function → GeneralTuner

Each Tuner is imported and used as follows:

from zenith_tune import FunctionRuntimeTuner

tuner = FunctionRuntimeTuner()

2. Define Objective Function and Tuning Parameters

Define an objective function, declare the tuning parameters as local variables inside it, and pass the function to the Tuner's optimize function together with the number of trials. The first argument of the objective function is an Optuna Trial object; use its API to define the tuning parameters. Refer to Optuna's API Reference for the supported types and the ways to define ranges.

from zenith_tune import GeneralTuner

def objective(trial, **kwargs):
    x = trial.suggest_int("x", low=-10, high=10)
    y = trial.suggest_int("y", low=-10, high=10)
    # Processing dependent on x, y (a sum of squares here, matching the log in step 3)
    cost_value = x**2 + y**2
    return cost_value

tuner = GeneralTuner()
tuner.optimize(objective, n_trials=10)
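Before spending trials on tuning, it can help to call the objective function once by hand with known parameter values. The following is a minimal sketch, not part of ZenithTune: `StubTrial` is a hypothetical stand-in that implements only `suggest_int`, and the sum-of-squares cost is an assumption chosen because it reproduces the values in the step 3 log. Optuna also provides `optuna.trial.FixedTrial` for this purpose.

```python
class StubTrial:
    """Hypothetical stand-in for an Optuna trial; returns preset values."""

    def __init__(self, params):
        self.params = params

    def suggest_int(self, name, low, high):
        value = self.params[name]
        # Reject preset values that fall outside the declared range
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside [{low}, {high}]")
        return value


def objective(trial, **kwargs):
    x = trial.suggest_int("x", low=-10, high=10)
    y = trial.suggest_int("y", low=-10, high=10)
    # Assumed cost: sum of squares, consistent with the step 3 log values
    return x**2 + y**2


cost = objective(StubTrial({"x": 4, "y": -5}))
print(cost)  # 41, the value logged for {'x': 4, 'y': -5}
```

A dry run like this catches typos in parameter names and range definitions without starting a study.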

FunctionRuntimeTuner and CommandRuntimeTuner measure execution time automatically and use it as the cost, so the objective function does not need to return a cost value. GeneralTuner, in contrast, leaves everything to the user, from parameter definition to computing the cost value. CommandOutputTuner differs from the other Tuners in that its objective is defined as a pair of functions: (1) a command generation function and (2) a standard output parsing function. CommandOutputTuner builds a new objective function from (1) and (2) and tunes with it. See the explanation of each Tuner below for details. For the keyword arguments passed to the objective function, see Tuning Tips: Metadata Available from Objective Function.

FunctionRuntimeTuner

FunctionRuntimeTuner optimizes function execution time. Users define a function dependent on hyperparameters and pass it as the first argument to the optimize function.

import time

from zenith_tune import FunctionRuntimeTuner

def func(trial, **kwargs):
    # Define hyperparameters
    t = trial.suggest_int("t", low=1, high=10)
    # Processing dependent on hyperparameters
    time.sleep(t)

tuner = FunctionRuntimeTuner()
tuner.optimize(func, n_trials=10)

CommandRuntimeTuner

CommandRuntimeTuner optimizes command execution time. Users define a function that generates a command string and pass it as the first argument to the optimize function. If the command terminates abnormally, that trial is excluded from evaluation.

from zenith_tune import CommandRuntimeTuner

def command_generator(trial, **kwargs):
    # Define hyperparameters
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # Command dependent on hyperparameters
    command = f"python train.py --num-workers {num_workers}"
    return command

tuner = CommandRuntimeTuner()
tuner.optimize(command_generator, n_trials=10)
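To make the idea of "command execution time as cost" concrete, the sketch below times a command with the standard library and discards runs that exit abnormally. It is only an illustration of the concept, not ZenithTune's implementation: `time_command` is a hypothetical helper, and the generated command is a stand-in for `train.py` that simply sleeps.

```python
import shlex
import subprocess
import sys
import time


def time_command(command):
    """Run a command string and return elapsed seconds, or None on failure."""
    start = time.perf_counter()
    completed = subprocess.run(shlex.split(command), capture_output=True)
    elapsed = time.perf_counter() - start
    # A non-zero exit code is treated as an abnormal termination
    return elapsed if completed.returncode == 0 else None


def command_generator(num_workers):
    # Hypothetical stand-in for train.py: sleeps 0.01 s per worker
    return f'"{sys.executable}" -c "import time; time.sleep(0.01 * {num_workers})"'


ok = time_command(command_generator(2))
failed = time_command(f'"{sys.executable}" -c "raise SystemExit(1)"')
print(ok, failed)  # elapsed seconds for the first run, None for the second
```

Running the generated command once by hand in this way is a quick check that it starts and exits cleanly before any trials are spent on it.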

CommandOutputTuner

CommandOutputTuner executes the given command and optimizes a specific value found in its standard output. Users define two functions, one that generates a command string and one that extracts the value from the standard output, and pass them as the first and second arguments to the optimize function. The value extraction function receives, as its first argument, the path to the file containing the command's standard output; extract the cost value from that file using file processing and string operations such as regular expressions.

Note that if the command terminates abnormally or value extraction fails, that trial is excluded from evaluation.

import re

from zenith_tune import CommandOutputTuner

def command_generator(trial, **kwargs):
    # Define hyperparameters
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # Command dependent on hyperparameters
    command = f"python train.py --num-workers {num_workers}"
    return command

def value_extractor(log_path):
    # Open log_path and extract the cost value
    with open(log_path, "r") as f:
        for line in f:
            if "epoch_time=" in line:
                # findall returns the captured group as a string
                result = re.findall(r"epoch_time=(\d+\.\d+)", line)[0]
                epoch_time = float(result)
                return epoch_time

tuner = CommandOutputTuner()
tuner.optimize(command_generator, value_extractor, n_trials=10)
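Because the value extraction function only needs a file path, it can be checked on its own against a sample log before it is handed to the tuner. The sketch below is one way to do that, assuming a log line of the form `epoch_time=12.34`; the sample log content is hypothetical, and returning `None` when nothing matches is a choice made for this sketch, not documented ZenithTune behavior.

```python
import os
import re
import tempfile


def value_extractor(log_path):
    """Return the first epoch_time value found in the log, or None."""
    pattern = re.compile(r"epoch_time=(\d+\.\d+)")
    with open(log_path, "r") as f:
        for line in f:
            match = pattern.search(line)
            if match:
                # group(1) is the captured number as a string
                return float(match.group(1))
    return None


# Hypothetical log content; real output depends on train.py
sample_log = "loading data\nepoch=1 epoch_time=12.34 loss=0.5\n"
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
    tmp.write(sample_log)
    log_path = tmp.name

extracted = value_extractor(log_path)
os.remove(log_path)
print(extracted)  # 12.34
```

Note that the pattern `\d+\.\d+` requires a decimal point, so a line such as `epoch_time=12` or a value in scientific notation would not match; widen the pattern if the command can print such values.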

GeneralTuner

GeneralTuner optimizes user-defined objective functions.

from zenith_tune import GeneralTuner

def objective(trial, **kwargs):
    # Define hyperparameters
    x = trial.suggest_int("x", low=-10, high=10)
    # Processing dependent on hyperparameters
    # (func is a placeholder for your own function)
    y = func(x)
    # Determine cost value
    cost_value = y**2
    return cost_value

tuner = GeneralTuner()
tuner.optimize(objective, n_trials=10)

3. Execute Tuning

When you run a tuning script using ZenithTune (let's call it optimize.py), it outputs the cost value and parameters for each trial. Users can obtain the best values and feed them back to the original tuning target.

$ python optimize.py

2025-07-18 08:08:53,545 - zenith-tune - INFO - Using distributed launcher: None

[I 2025-07-18 08:08:54,103] A new study created in RDB with name: study_20250718_080853

[I 2025-07-18 08:08:54,185] Trial 0 finished with value: 41.0 and parameters: {'x': 4, 'y': -5}. Best is trial 0 with value: 41.0.

[I 2025-07-18 08:08:54,222] Trial 1 finished with value: 85.0 and parameters: {'x': -6, 'y': -7}. Best is trial 0 with value: 41.0.

[I 2025-07-18 08:08:54,258] Trial 2 finished with value: 5.0 and parameters: {'x': 2, 'y': -1}. Best is trial 2 with value: 5.0.

[I 2025-07-18 08:08:54,293] Trial 3 finished with value: 64.0 and parameters: {'x': 8, 'y': 0}. Best is trial 2 with value: 5.0.

[I 2025-07-18 08:08:54,328] Trial 4 finished with value: 5.0 and parameters: {'x': -1, 'y': -2}. Best is trial 2 with value: 5.0.

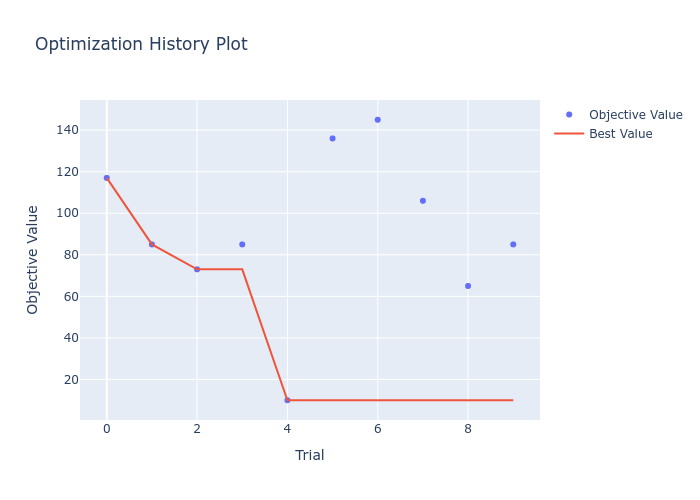
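The per-trial log lines above follow Optuna's logging format, so the best parameters can also be recovered from a saved log. The following is a minimal sketch, assuming the log text has been captured into a string and that the cost is being minimized, as in the log shown; `TRIAL_RE` and `parse_trials` are illustrative names, not part of ZenithTune or Optuna.

```python
import ast
import re

LOG = """\
[I 2025-07-18 08:08:54,185] Trial 0 finished with value: 41.0 and parameters: {'x': 4, 'y': -5}. Best is trial 0 with value: 41.0.
[I 2025-07-18 08:08:54,222] Trial 1 finished with value: 85.0 and parameters: {'x': -6, 'y': -7}. Best is trial 0 with value: 41.0.
[I 2025-07-18 08:08:54,258] Trial 2 finished with value: 5.0 and parameters: {'x': 2, 'y': -1}. Best is trial 2 with value: 5.0.
[I 2025-07-18 08:08:54,293] Trial 3 finished with value: 64.0 and parameters: {'x': 8, 'y': 0}. Best is trial 2 with value: 5.0.
[I 2025-07-18 08:08:54,328] Trial 4 finished with value: 5.0 and parameters: {'x': -1, 'y': -2}. Best is trial 2 with value: 5.0.
"""

# Captures the trial number, the cost value, and the parameter dict literal
TRIAL_RE = re.compile(
    r"Trial (\d+) finished with value: (\S+) and parameters: (\{.*?\})\."
)


def parse_trials(text):
    """Return a list of (trial_number, value, params) tuples."""
    trials = []
    for m in TRIAL_RE.finditer(text):
        params = ast.literal_eval(m.group(3))  # safe parsing of the dict literal
        trials.append((int(m.group(1)), float(m.group(2)), params))
    return trials


trials = parse_trials(LOG)
# min() keeps the earliest trial on ties, matching "Best is trial 2" above
best = min(trials, key=lambda t: t[1])
print(best)  # (2, 5.0, {'x': 2, 'y': -1})
```

The pattern assumes flat parameter dictionaries like the ones shown; nested values would need a more careful parser.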

4. Analyze Tuning Results

It is important to analyze the tuning results, for example to check whether tuning has properly converged and how much each hyperparameter contributes to the cost value. In ZenithTune, calling the analyze function after the optimize function aggregates several of Optuna's analyses and outputs the results.

tuner = GeneralTuner()

tuner.optimize(objective, n_trials=10)

tuner.analyze()

From the standard output of the analyze function, you can confirm the initial value/parameters, optimal value/parameters, and improvement rate from the initial value.

2025-07-18 08:18:30,076 - zenith-tune - INFO - First trial: value=117.0, params={'x': 9, 'y': 6}

2025-07-18 08:18:30,079 - zenith-tune - INFO - Best trial: trial_id=5, value=10.0, params={'x': -1, 'y': -3}

2025-07-18 08:18:30,079 - zenith-tune - INFO - Improvement rate from first: 11.7
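The numbers in this output can be cross-checked by hand. In the sketch below, the sum-of-squares cost and the reading of the improvement rate as the first value divided by the best value are both inferred from the logged numbers; they are assumptions, not ZenithTune's documented formula.

```python
def cost(params):
    # Assumed objective: sum of squares of x and y
    return float(params["x"] ** 2 + params["y"] ** 2)


first_value = cost({"x": 9, "y": 6})    # 81 + 36 = 117.0
best_value = cost({"x": -1, "y": -3})   # 1 + 9 = 10.0

# Assumed definition: how many times smaller the best cost is than the first
improvement_rate = first_value / best_value
print(first_value, best_value, improvement_rate)  # 117.0 10.0 11.7
```

Under this reading, a rate of 11.7 means the best trial's cost is 11.7 times smaller than the cost of the first trial.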

Additionally, the following analysis results are output to the working directory containing tuning results (default is under ./outputs/):

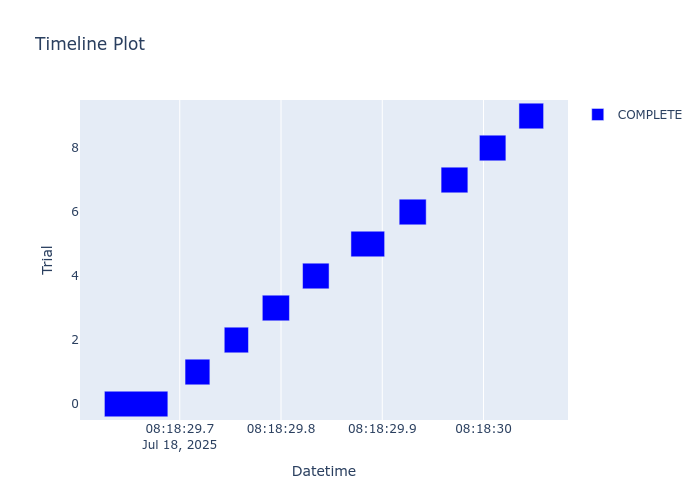

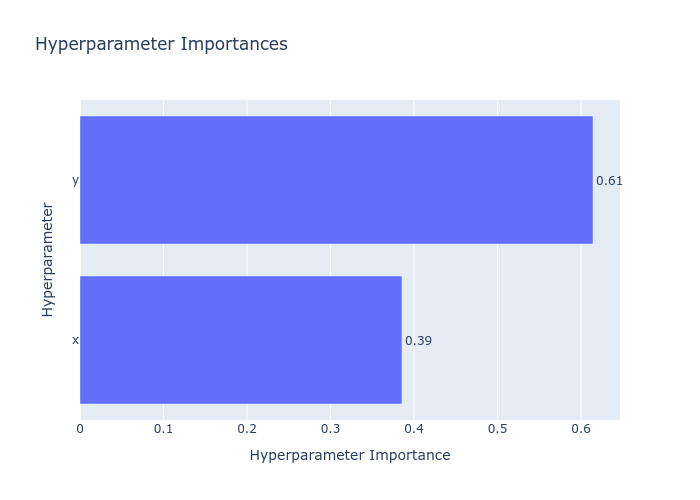

- timeline.png: Time spent on each trial in real time

- history.png: Progress of cost values during the trial process

- importances.png: Importance of each tuning parameter

Check whether each trial was executed properly and whether any parameters clearly should not be tuned, and modify the tuning script as necessary. Tuning is complete once enough trials have run and the best value has converged.