Advanced Tuning

This document explains advanced tuning methods using ZenithTune.

Parallel Execution of Tuning Scripts

ZenithTune actively supports tuning applications that use multiple processes, such as distributed training. Because the ways a distributed job can be launched depend on the computer system and job scheduler, consider the following methods in order when tuning a multi-process application with ZenithTune.

Method 1. Tuning Script: Single Process, Tuning Target: Multi-Process

This is the simplest approach. Write a tuning script that runs as a single process, as usual, and have the command generation function return a command that launches multiple processes.

This method is suitable when you can launch processes on multiple nodes from a single entry point.

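```python
from zenith_tune import CommandOutputTuner


def command_generator(trial, **kwargs):
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # Launch 8 training processes from this single entry point.
    command = f"mpirun --np 8 python train.py --num-workers {num_workers}"
    # command = f"torchrun --nproc-per-node 8 train.py --num-workers {num_workers}"
    return command


# value_extractor: your function that extracts the objective value from the command output.
tuner = CommandOutputTuner()
tuner.optimize(command_generator, value_extractor, n_trials=10)
```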

Tuning execution method:

```shell
python optimize.py
```
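The examples in this document pass a value_extractor without defining it. As a minimal sketch, assuming the training script prints lines such as "throughput: 123.4", the hypothetical helper extract_throughput below pulls the last reported value out of the captured output text. How CommandOutputTuner hands the output to value_extractor (for example, as a log file path or as text) is not specified here, so adapt the wrapper accordingly.

```python
import re
from typing import Optional

# Hypothetical log format: the training script prints "throughput: <number>".
_THROUGHPUT_RE = re.compile(r"throughput:\s*([0-9]+(?:\.[0-9]+)?)")


def extract_throughput(output: str) -> Optional[float]:
    """Return the last reported throughput in the output, or None if none was found."""
    matches = _THROUGHPUT_RE.findall(output)
    if not matches:
        return None  # e.g. the trial crashed before reporting anything
    return float(matches[-1])


log = "epoch 1 throughput: 10.5\nepoch 2 throughput: 12\n"
print(extract_throughput(log))  # 12.0
```

Taking the last value rather than the first avoids scoring a trial on warm-up iterations, which are typically slower than steady state.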

Method 2. Tuning Script: Multi-Process, Tuning Target: Single Process

Depending on the job scheduler or cluster system, the system itself may serve as the entry point for multi-process execution, making it cumbersome for users to launch processes on multiple nodes from a single entry point.

In such cases, you can instead run the tuning script itself as multiple processes and have the command generation function produce single-process commands.

Because the Tuner implements exclusive control and communication setup for multi-process execution, you can start tuning with a standard distributed entry point such as mpirun or torchrun.

```python
from zenith_tune import CommandOutputTuner


def command_generator(trial, **kwargs):
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # Each tuning process generates a single-process command.
    command = f"python train.py --num-workers {num_workers}"
    return command


tuner = CommandOutputTuner()
tuner.optimize(command_generator, value_extractor, n_trials=10)
```

Tuning execution method:

```shell
mpirun --np 8 python optimize.py
# torchrun --nproc-per-node 8 optimize.py
```
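Under this method every tuning process runs the same command_generator. If you need to tell the processes apart (for example, to tag log messages with the process rank), the launchers expose the rank through environment variables: torchrun sets RANK, Open MPI's mpirun sets OMPI_COMM_WORLD_RANK, and MPICH-style launchers set PMI_RANK. The helper below is a hypothetical sketch and is not part of ZenithTune.

```python
import os

# Rank variables set by common launchers, checked in this order.
_RANK_VARS = ("RANK", "OMPI_COMM_WORLD_RANK", "PMI_RANK")


def launcher_rank(default: int = 0) -> int:
    """Return this process's global rank as reported by the launcher, or `default`."""
    for name in _RANK_VARS:
        value = os.environ.get(name)
        if value is not None and value.strip().isdigit():
            return int(value)
    return default  # e.g. started with plain `python optimize.py`
```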

Method 3. Tuning Script: One Process per Node, Tuning Target: Multi-Process

In container-based distributed environments such as Kubernetes, you may need to write an entry point for each compute node. In this case, you can run a single-process tuning script on each compute node and have the command generation function launch as many processes as the node's parallelism allows (e.g., the number of GPUs).

```python
from zenith_tune import CommandOutputTuner


def command_generator(trial, **kwargs):
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # Launch one process per GPU on this node (8 GPUs in this example).
    command = f"torchrun --nproc-per-node 8 train.py --num-workers {num_workers}"
    return command


tuner = CommandOutputTuner()
tuner.optimize(command_generator, value_extractor, n_trials=10)
```

Tuning execution method:

```shell
torchrun --nproc-per-node 1 optimize.py
```
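Method 3 hard-codes 8 processes per node. As a sketch of deriving the process count from each node's parallelism instead, the hypothetical helper local_gpu_count below counts the entries in CUDA_VISIBLE_DEVICES. This assumes the container runtime or scheduler sets that variable; when it is unset or empty, the helper falls back to a default.

```python
import os


def local_gpu_count(default: int = 1) -> int:
    """Hypothetical helper: count GPUs listed in CUDA_VISIBLE_DEVICES on this node."""
    visible = os.environ.get("CUDA_VISIBLE_DEVICES", "")
    devices = [d for d in visible.split(",") if d.strip()]
    return len(devices) if devices else default


def command_generator(trial, **kwargs):
    num_workers = trial.suggest_int("num_workers", low=1, high=10)
    # One training process per visible GPU instead of a hard-coded 8.
    nproc = local_gpu_count()
    return f"torchrun --nproc-per-node {nproc} train.py --num-workers {num_workers}"
```

If PyTorch is installed in the tuning environment, torch.cuda.device_count() is a more direct alternative to parsing the environment variable.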

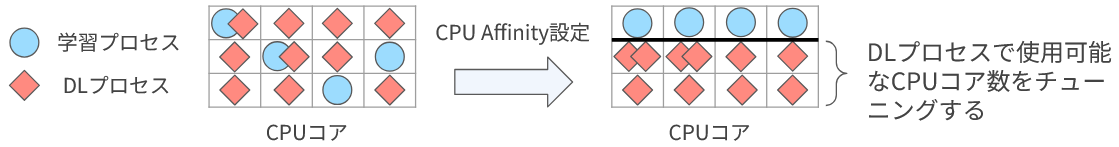

CPU Affinity Tuning

In distributed training, training processes and data loader processes compete for CPU cores, which can reduce overall throughput. Appropriately limiting the CPU cores available to data loader processes can stabilize the performance of the training processes and improve overall throughput. However, restricting data loader processes to too few cores can delay the supply of data to training and actually slow it down. Tuning the number of CPU cores available to data loader processes therefore lets you find the setting that maximizes throughput.

For this purpose, ZenithTune provides components for tuning the CPU affinity of data loader processes. The tuning procedure is as follows:

- Import zenith_tune.tuning_component.dataloader_affinity.worker_affinity_init_fn.
- Set worker_affinity_init_fn as the worker_init_fn of a Torch-compliant data loader.
- Tune the available_cpus argument of worker_affinity_init_fn.

worker_affinity_init_fn sets CPU affinity for its own data loader process according to the value of available_cpus.

By finding the optimal value of available_cpus, you can achieve the best performance.
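The following is a minimal sketch of the mechanism, not ZenithTune's implementation: the hypothetical limited_affinity_init_fn restricts the calling data loader worker to available_cpus cores using Linux's os.sched_setaffinity. Which cores it picks (here, the lowest-numbered cores the process is already allowed to use) is an assumption; worker_affinity_init_fn may choose differently.

```python
import os
from typing import Set


def pick_cpus(allowed: Set[int], available_cpus: int) -> Set[int]:
    """Choose `available_cpus` cores from `allowed`, lowest-numbered first."""
    if available_cpus < 1:
        raise ValueError("available_cpus must be at least 1")
    return set(sorted(allowed)[:available_cpus])


def limited_affinity_init_fn(worker_id: int, available_cpus: int) -> None:
    """Hypothetical worker_init_fn: pin the calling worker process to a subset of cores.

    Linux-only, because os.sched_getaffinity and os.sched_setaffinity are.
    """
    allowed = os.sched_getaffinity(0)  # cores this process may currently use
    os.sched_setaffinity(0, pick_cpus(allowed, available_cpus))
```

PyTorch calls worker_init_fn with the worker id only, so the extra argument has to be bound in advance, for example DataLoader(dataset, num_workers=4, worker_init_fn=functools.partial(limited_affinity_init_fn, available_cpus=4)).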

This feature also supports the MMEngine registry.

By writing import zenith_tune in the MMEngine training script, you can reference the module as zenith_tune.worker_affinity_init_fn.

This integration allows you to forcibly override the data loader's worker_init_fn using MMEngine's --cfg-options.

```shell
python train.py --cfg-options train_dataloader.worker_init_fn.type=zenith_tune.worker_affinity_init_fn \
    train_dataloader.worker_init_fn.available_cpus={available_cpus}
```
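In a command generation function, a trial can suggest available_cpus and substitute it into this --cfg-options override. In the sketch below, the search range (1 up to os.cpu_count()) is an assumption; narrow it to the cores actually left over for data loading on your nodes.

```python
import os


def command_generator(trial, **kwargs):
    # Assumed search range: 1 .. number of logical CPUs on this machine.
    max_cpus = os.cpu_count() or 1
    available_cpus = trial.suggest_int("available_cpus", low=1, high=max_cpus)
    return (
        "python train.py --cfg-options "
        "train_dataloader.worker_init_fn.type=zenith_tune.worker_affinity_init_fn "
        f"train_dataloader.worker_init_fn.available_cpus={available_cpus}"
    )
```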

Limiting Training Data Sample Count

When tuning, each trial should be short while still measuring performance accurately.

Typically, you limit the number of epochs or iterations through the framework's own configuration options. When the framework provides no such option, you can forcibly limit the number of data samples used for training with zenith_tune.tuning_component.LimitedSampler.

Pass the maximum number of samples as the limited_samples argument of LimitedSampler, and use the resulting sampler as the data loader's sampler.
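LimitedSampler's exact constructor is not documented here, so the class below is a hypothetical stand-in that illustrates the idea: it yields at most limited_samples indices per epoch, optionally shuffled, and reports the capped length so that the number of iterations per epoch shrinks accordingly. PyTorch's DataLoader accepts any iterable of indices as its sampler argument.

```python
import random
from typing import Iterator, Optional, Sized


class CappedSampler:
    """Hypothetical stand-in for LimitedSampler: yield at most `limited_samples` indices."""

    def __init__(self, data_source: Sized, limited_samples: int,
                 shuffle: bool = True, seed: Optional[int] = None) -> None:
        if limited_samples < 1:
            raise ValueError("limited_samples must be at least 1")
        self.data_source = data_source
        self.limited_samples = limited_samples
        self.shuffle = shuffle
        self.rng = random.Random(seed)

    def __iter__(self) -> Iterator[int]:
        indices = list(range(len(self.data_source)))
        if self.shuffle:
            self.rng.shuffle(indices)  # draw a different subset each epoch
        return iter(indices[: len(self)])

    def __len__(self) -> int:
        return min(self.limited_samples, len(self.data_source))
```

For example, DataLoader(dataset, batch_size=32, sampler=CappedSampler(dataset, limited_samples=3200)) would run at most 100 iterations per epoch, regardless of the dataset's full size.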