AcuiRT

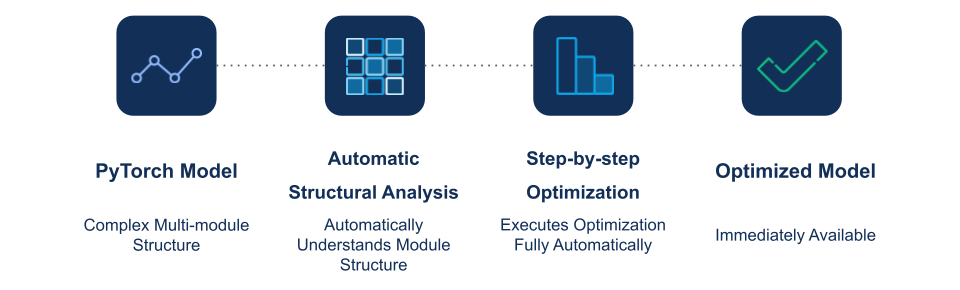

AcuiRT (Advanced Conversion UtIlities for Run-Time) is an automatic model-conversion library that accelerates AI model inference using device-specific deep learning compilers. With AcuiRT, you can run fast model inference on actual hardware through deep learning compilers such as TensorRT.

Features

Improved Execution Time

Converting a model with AcuiRT applies optimizations such as kernel fusion, quantization, and kernel selection, which enable fast inference on target devices.

Reduced Development Time

When you use a deep learning compiler such as TensorRT directly, you must convert the entire model at once. For practical models of any significant size, however, this rarely succeeds on the first attempt. Hardware-specific deep learning compilers support only a limited set of operators and quantization methods, so conversion has traditionally required manual intervention such as adjusting configurations or modifying the model.

AcuiRT implements a flexible conversion strategy: when some modules cannot be converted, it identifies the cause, converts only the convertible parts, and runs the remaining modules in PyTorch. This removes the need for manual module partitioning and trial-and-error, allowing users to focus on model design.

AcuiRT also includes observation features that clearly show which modules could not be converted and why. These features reduce the engineering effort needed to convert the entire model for target devices, accelerating development.
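The partial-conversion strategy described above can be illustrated with a toy model. This is a minimal sketch, not AcuiRT's API or implementation: `Module`, `SUPPORTED_OPS`, `partition`, and `report` are hypothetical names, and plain Python objects stand in for PyTorch modules. It assumes that only leaf modules carry operators and that a module is convertible only if every operator in its subtree is in the supported set. A fully convertible subtree is kept whole; otherwise the sketch recurses into the children and records the unsupported operators of each leaf left in PyTorch.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical operator set supported by the target compiler (assumption for illustration).
SUPPORTED_OPS = {"conv", "relu", "linear", "add"}


@dataclass
class Module:
    """Toy stand-in for a PyTorch module; in this sketch only leaves carry ops."""
    name: str
    ops: List[str] = field(default_factory=list)
    children: List["Module"] = field(default_factory=list)


def unsupported_ops(m: Module) -> List[str]:
    """Collect unsupported operators from a module and all of its descendants."""
    bad = [op for op in m.ops if op not in SUPPORTED_OPS]
    for child in m.children:
        bad.extend(unsupported_ops(child))
    return bad


def partition(m: Module, prefix: str = "") -> Tuple[List[str], Dict[str, List[str]]]:
    """Return (paths handed to the compiler, {fallback path: unsupported ops})."""
    path = f"{prefix}.{m.name}" if prefix else m.name
    bad = unsupported_ops(m)
    if not bad:
        # The whole subtree is convertible: convert it as one unit.
        return [path], {}
    if not m.children:
        # Unconvertible leaf: keep it in PyTorch and record the cause.
        return [], {path: sorted(set(bad))}
    converted: List[str] = []
    fallback: Dict[str, List[str]] = {}
    for child in m.children:
        c, f = partition(child, path)
        converted.extend(c)
        fallback.update(f)
    return converted, fallback


def report(fallback: Dict[str, List[str]]) -> None:
    """Print each module left in PyTorch together with its cause."""
    for path, causes in fallback.items():
        print(f"[fallback to PyTorch] {path}: unsupported ops {', '.join(causes)}")


model = Module("model", children=[
    Module("backbone", children=[Module("conv1", ["conv", "relu"]),
                                 Module("conv2", ["conv", "relu"])]),
    Module("head", children=[Module("fc", ["linear"]),
                             Module("nms", ["nms", "topk"])]),
])

converted, fallback = partition(model)
print(converted)  # ['model.backbone', 'model.head.fc']
report(fallback)  # [fallback to PyTorch] model.head.nms: unsupported ops nms, topk
```

Keeping convertible subtrees whole, rather than converting leaf by leaf, plausibly leaves the compiler more room for optimizations such as kernel fusion; a real tool would also have to handle data transfer between the compiled and PyTorch parts, which this sketch ignores.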

Quick Start Guide

Conversion Tips

In addition to automatic conversion, AcuiRT supports manual conversion of individual modules.